Chapter 7 Linear Independence

One of the most central ideas in all of Linear Algebra is that of linear independence. For regression problems, it is repeatedly stressed that multicollinearity is problematic. Multicollinearity is simply a statistical term for linear dependence. It’s bad. Having a firm understanding of linear combinations, we can develop the important concept of linear independence.

7.1 Linear Independence

Definition 7.1 (Linear Dependence and Linear Independence) A set of vectors \(\{\v_1,\v_2,\dots, \v_n\}\) is linearly dependent if we can express the zero vector, \(\bo{0}\), as non-trivial linear combination of the vectors. In other words there exist some constants \(\alpha_1,\alpha_2,\dots \alpha_n\) (non-trivial means that these constants are not all zero) for which \[\begin{equation} \alpha_1\v_1 +\alpha_2\v_2 + \dots +\alpha_n\v_n=\bo{0}. \tag{7.1} \end{equation}\]

A set of terms is linearly independent if Equation (7.1) has only the trivial solution, \[\alpha_1=\alpha_2=\dots=\alpha_n = 0.\]

Another way to express linear dependence is to say that we can write one of the vectors as a linear combination of the others. If there exists a non-trivial set of coefficients \(\alpha_1,\alpha_2, \dots, \alpha_n\) for which \[\alpha_1\v_1 +\alpha_2\v_2 + \dots +\alpha_n\v_n=\bo{0}\] then for \(\alpha_j \neq 0\) we could write \[\v_j=-\frac{1}{\alpha_j} \sum_{\substack{i=1\\i \neq j}}^n \alpha_i \v_i\]

Example 7.1 (Linearly Dependent Vectors) The vectors \(\v_1 =\pm 1\\2\\2 \mp, \v_2 = \pm 1\\2\\3 \mp, \mbox{ and } \v_3 = \pm 3\\6\\7 \mp\) are linearly dependent because \[\v_3 = 2\v_1+\v_2\] or, equivalently, because \[2\v_1+\v_2-\v_3 = \bo{0}\]

7.1.1 Determining Linear Independence

You should realize that the linear combination expressed Definition @ref{def:linind} can be written as a matrix vector product. Let \(\A_{m\times n} = (\A_1|\A_2|\dots|\A_n)\) be a matrix. Then by Definition @ref{def:linind}, the columns of \(\A\) are linearly independent if and only if the equation \[\begin{equation} \A\x = \bo{0} \tag{7.2} \end{equation}\] has only the trivial solution, \(\x=0\). Equation (7.2) is commonly known as the homogeneous linear equation. For this equation to have only the trivial solution, it must be the case that under Gauss-Jordan elimination, the augmented matrix \((\A|\bo{0})\) reduces to \((\bo{I}|0)\). We have already seen this condition in our discussion about matrix inverses - if a square matrix \(\A\) reduces to the identity matrix under Gauss-Jordan elimination then it is equivalently called full rank, nonsingular, or invertible. Now we add an additional condition equivalent to the others - the matrix \(\A\) has linearly independent columns (and rows).

In Theorem 7.1 we provide an important list of equivalent conditions regarding the existence of a matrix inverse.

Theorem 7.1 (Equivalent Conditions for Matrix Invertibility) Let \(\A\) be an \(n\times n\) matrix - a square matrix. Then the following statements are equivalent. (If one these statements is true, then all of these statements are true)

- \(\A\) is invertible (\(\A^{-1} exists\))

- \(\A\) has full rank (\(rank(\A)=n\))

- The columns of \(\A\) are linearly independent

- The rows of \(\A\) are linearly independent

- The system \(\A\x=\b\), \(\b\neq \bo{0}\) has a unique solution

- \(\A\x=\bo{0} \Longrightarrow \x=\bo{0}\)

- \(\A\) is nonsingular

- \(\A \xrightarrow{Gauss-Jordan} \bo{I}\)

7.2 Span of Vectors

Definition 7.2 (Vector Span) The span of a single vector \(\v\) is the set of all scalar multiples of \(\v\): \[span(\v)=\{\alpha\v\ \mbox{ for any constant } \alpha\}\] The span of a collection of vectors, \(\V=\{\v_1,\v_2,\dots,\v_n\}\) is the set of all linear combinations of these vectors: \[span(\V)=\{\alpha_1\v_1+\alpha_2\v_2+\dots+\alpha_n\v_n \mbox{ for any constants }\alpha_1,\dots,\alpha_n\}\]

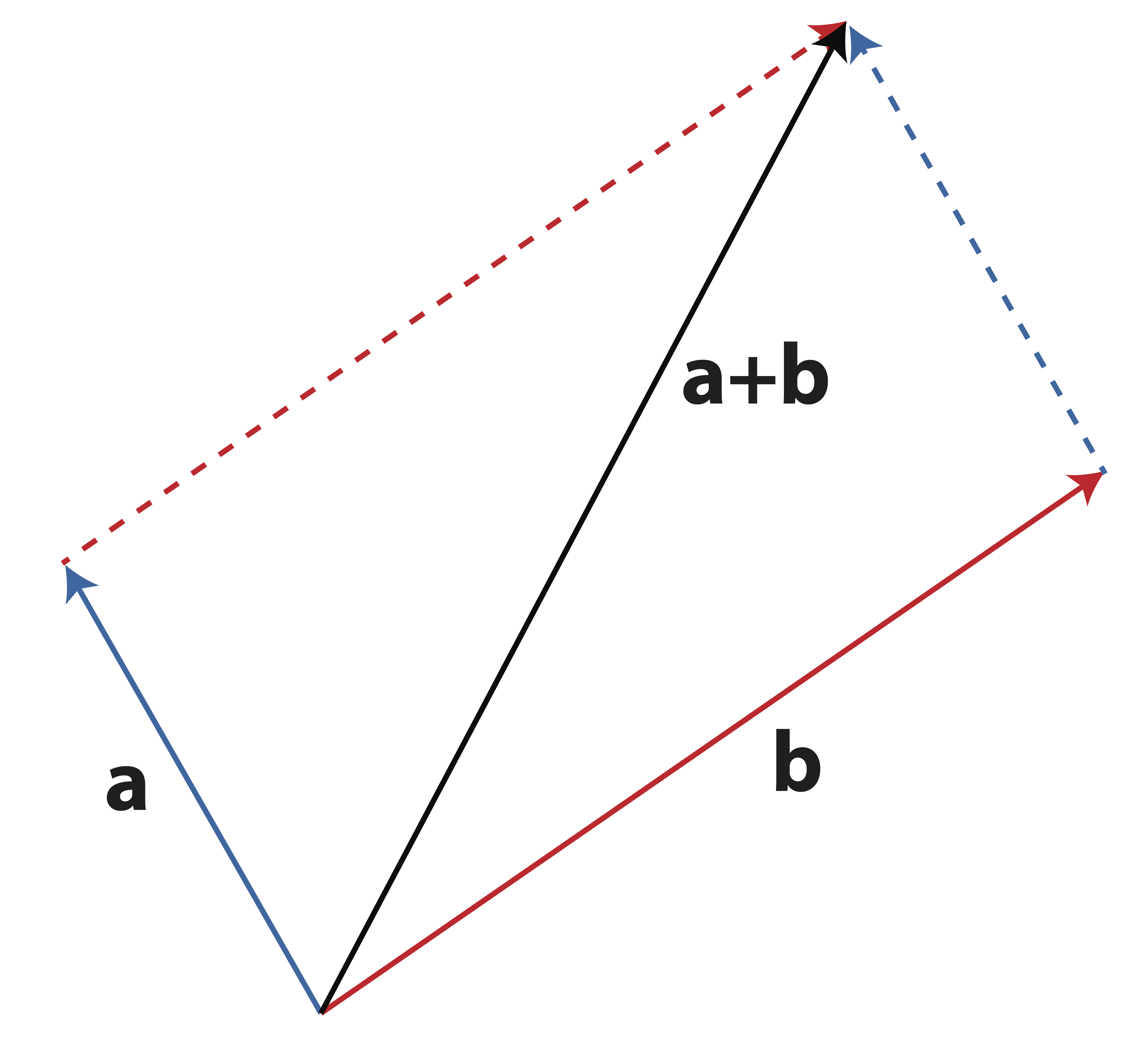

Recall that addition of vectors can be done geometrically using the head-to-tail method shown in Figure 7.1.

Figure 7.1: Geometrical addition of vectors: Head-to-tail

If we have two linearly independent vectors on a coordinate plane, then any third vector can be written as a linear combination of them. This is because two vectors is sufficient to span the entire 2-dimensional plane. You should take a moment to convince yourself of this geometrically, using the animation in Figure 7.2 to help.

Figure 7.2: Animation: Span of Two Linear Independent Vectors is a Plane.

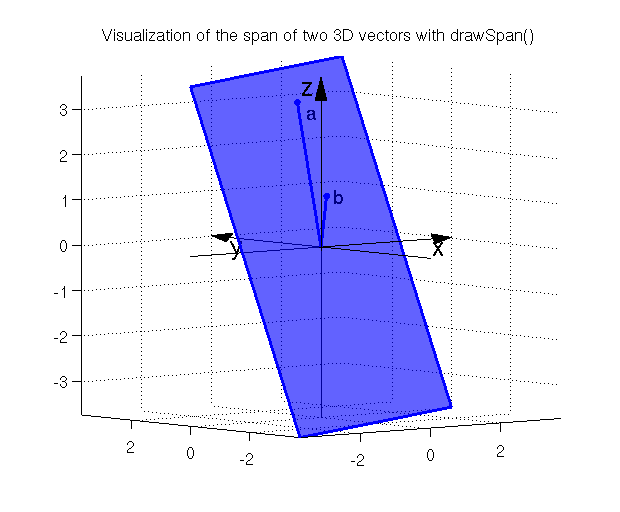

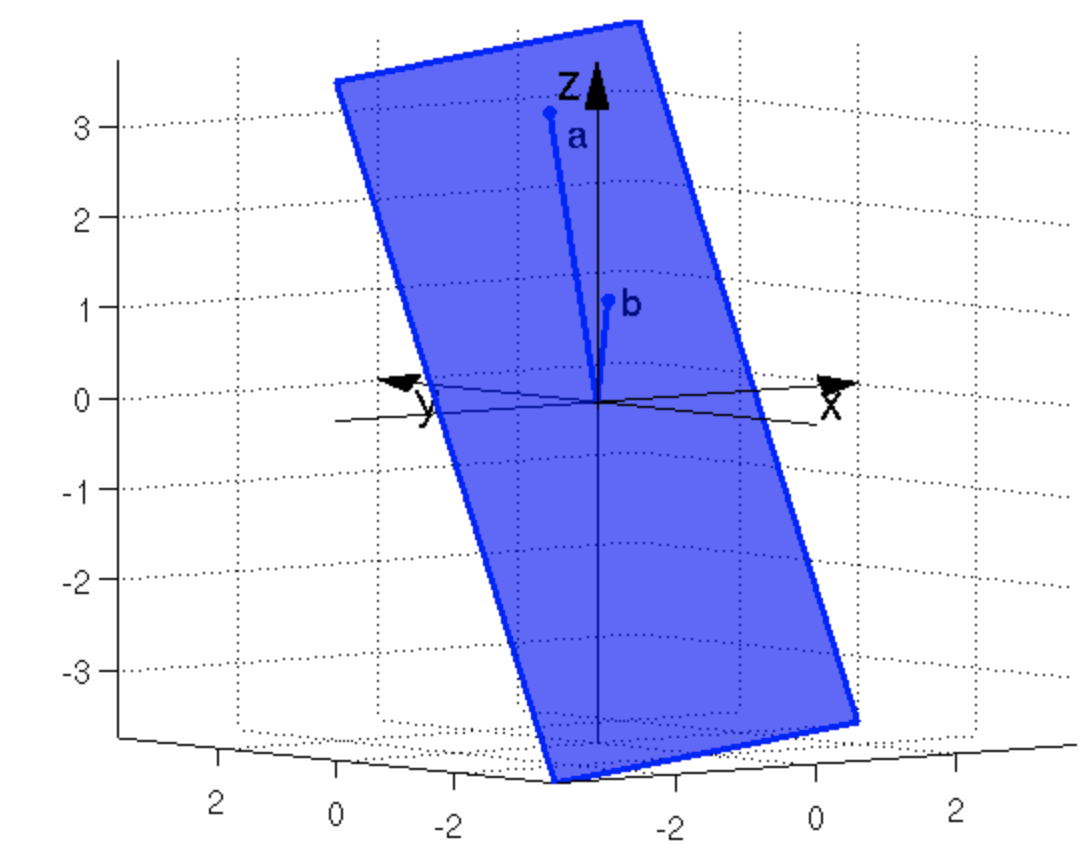

In 3-space, two linearly independent vectors can still only span a plane. Figure 7.3 depicts this situation. The set of all linearly combinations of the two vectors \(\a\) and \(\b\) (i.e. the \(span(\a,\b)\)) carves out a plane. We call this a two-dimensional collection of vectors a subspace of \(\Re^3\). A subspace is formally defined in Definition 7.3.

Figure 7.3: The \(span(\a,\b)\) in \(\Re^3\) creates a plane (a 2-dimensional subspace)

Definition 7.3 (Subspace) A subspace, \(\mathcal{S}\) of \(\Re^n\) is thought of as a “flat” (having no curvature) surface within \(\Re^n\). It is a collection of vectors which satisfies the following conditions:

- The origin (\(\bo{0}\) vector) is contained in \(\mathcal{S}\)

- If \(\x\) and \(\y\) are in \(\mathcal{S}\) then the sum \(\x+\y\) is also in \(\mathcal{S}\)

- If \(\x\) is in \(\mathcal{S}\) and \(\alpha\) is a constant then \(\alpha\x\) is also in \(\mathcal{S}\)

The span of two vectors \(\a\) and \(\b\) is a subspace because it satisfies these three conditions. (Can you prove it formally? See exercise 4).

Example 7.2 (Span) Let \(\a=\pm 1\\3\\4 \mp\) and \(\b=\pm 3\\0\\1 \mp\). Explain why or why not each of the following vectors is contained in the \(span(\a,\b)\)?

- \(\x=\pm 5\\6\\9 \mp\)

- To determine if \(\x\) is in the \(span(\a,\b)\) we need to find coefficients \(\alpha_1, \alpha_2\) such that \[\alpha_1\a+\alpha_2\b=\x.\] Thus, we attempt to solve the system \[\pm 1&3\\3&0\\4&1 \mp \pm \alpha_1\\ \alpha_2 \mp = \pm 5\\6\\9\mp.\] After Gaussian Elimination, we find that the system is consistent with the solution \[\pm\alpha_1\\ \alpha_2 \mp=\pm 2\\1\mp\] and so \(\x\) is in fact in the \(span(\a,\b)\).

- \(\y=\pm 2\\4\\6 \mp\)

- We could follow the same procedure as we did in part (a) to learn that the corresponding system is not consistent and thus that \(\y\) is not in the \(span(\a,\b)\).

Definition 7.4 (Hyperplane) A hyperplane is a subspace with 1 less dimension than the ambient space. Such a space “cuts” the ambient space into two pieces, one that is “above” the hyperplane, and one that is “below” it. Hyperplanes are not always defined to go through the origin, so we will distinguish these subspaces from affine hyperplanes, which are not subspaces.

7.3 Exercises

-

Six views of matrix multiplication: This notational exercise turns out to contain one of (well, six of) the most fundamentally important concepts to grasp for applied linear algebra. We must be able to intuitively create matrix multiplications from every angle in order for us to be strong practitioners. This is not something that we develop immediately. It comes through practice, visualization, and experience. Do not skip this exercise and keep it in your pocket as you proceed through this book.

Let \(\A_{m\times k}\), \(\B_{k\times n}\), and \(\C_{m\times n}\) be matrices such that \[\A\B=\C.\]- Express the first column of \(\C\) as a linear combination of the columns of \(\A\).

- Express the first column of \(\C\) as a matrix-vector product.

- Express \(\C\) as a sum of outer products.

- Express the first row of \(\C\) as a linear combination of the rows of \(\B\).

- Express the first row of \(\C\) as a matrix-vector product.

- Express the element \(\C_{ij}\) as an inner product of row or column vectors from \(\A\) and \(\B\).

- Determine whether or not the vectors \[\x_1=\pm 1\\3\\1\mp,\x_2=\pm 0\\1\\1\mp,\x_3=\pm 2\\1\\0\mp\] are linearly independent.

-

Let \(\a=\pm 1\\3\\4 \mp\) and \(\b=\pm 3\\0\\1 \mp\).

- Show that the zero vector, \(\pm 0\\0\\0 \mp\) is in the \(span(\a,\b)\).

- Determine whether or not the vector \(\pm 1\\0\\1 \mp\) is in the \(span(\a,\b)\).

-

Which of the following sets of variables has a clear problem with linear dependence? Explain your reasoning.

-

A garden center that stocks products on hundreds of hand-built rectangular display tables, all in varying sizes, has data that contains:

- Table Number

- Length of table

- Width of Table

- Table Perimeter

- Area of Table

-

Apple farmer tracking his product has data containing:

- Truck number

- Number of pallets on truck

- Number of boxes per pallet

- Total number of boxes on truck

-

A triathlon race company collects the following information from each participant:

- Name

- Swim time

- Bike time

- Run time

- Total race time

-

A software company keeps the following payroll data for its salaried employees:

- Employee ID

- Annual Salary (sans bonus)

- Tenure at company

- Gross monthly pay

- Bonus amount

-

A garden center that stocks products on hundreds of hand-built rectangular display tables, all in varying sizes, has data that contains:

- Describe the span of one vector in \(\Re^3\).

- Describe the span of two linearly independent vectors in \(\Re^3\).

- Describe the span of two linearly dependent vectors in \(\Re^3\).

- What is the span of the zero vector, \(\bo{0}=(0,0,\dots, 0)\)?

- Compare the \(span \left\lbrace \pm 1\\1 \mp \right\rbrace\) to the \(span \left\lbrace \pm 1\\1 \mp , \pm 2\\2 \mp \right\rbrace\).

- What is the dimension of the \(span \left\lbrace\pm 1\\1 \mp , \pm 2\\2 \mp \right\rbrace\)

- What is the definition of the dimension of a subspace?

- How would you describe a hyperplane?

- Draw the \(span(\a,\b)\) if \(\a=\pm 1\\2 \mp\) and \(\b=\pm 3\\6 \mp\).

- Prove that the span of vectors is a subspace by showing that it satisfies the three conditions from Definition 7.2. To make a formal proof, the strategy should be as follows: (1) Take two arbitrary elements from the span and show that when you add them together, the resulting vector is also in the span. (2) Take one arbitrary vector from the span and show that when you multiply it by a constant, the resulting vector is also in the span. (3) Show that the zero vector is contained in the span. You can simply show this fact for the span of two vectors and notice how the concept will hold for more than two vectors.

-

True/False Mark each statement as true or false. Justify your response.

- If \(\A\x=\b\) has a solution then \(\b\) can be written as a linear combination of the columns of \(\A\).

- If \(\A\x=\b\) has a solution then \(\b\) is in the span of the columns of \(\A\).

- If \(\v_1\) is in the \(span(\v_2,\v_3)\), then \(\v_1, \v_2, \,\mbox{ and},\v_3\) form a linearly independent set.

- Two vectors are linearly independent only if they are not perfectly correlated, \(-1<\rho<1\), where \(\rho\) is Pearson’s correlation coefficient.

-

Let \(\x = \left(\begin{array}{c} 2\\0\\1 \end{array}\right)\), \(\y = \left(\begin{array}{c} 1\\1\\2 \end{array}\right)\), and \(\z = \left(\begin{array}{c} 3\\-1\\0 \end{array}\right)\).

- Are the vectors \(\x, \y, \z\) linearly independent?

- What is the rank of the matrix that contains these 3 vectors as columns?

- What is the rank of the matrix that contains these 3 vectors as rows?

List of Key Terms

- linearly independent

- linearly dependent

- full rank

- perfect multicollinearity

- severe multicollinearity

- invertible

- nonsingular

- linear combination geometrically

- linear (in)dependence geometrically

- vector span

- subspace

- dimension of subspace

- hyperplane